500K pps with tokio

After reading the Cloudflare blog post on how to receive 1M packets per second, I wondered: How fast can we go with Rust and tokio?

Scenario

For my game server, I want to be able to receive many small UDP packets. Lots of them. Since typical updates in my case are only a few bytes (say x and y coordinates, a uid and a timestamp, totalling about 32 bytes), a game server will reach its processing limit before it even gets close to saturating the bandwidth of its uplink.

For this experiment, let’s assume we bind one IPv4 UDP socket that receives all our game traffic. Further, the received messages are sent to another task for processing (here: incrementing a counter and otherwise ignoring the message).

Tokio

Tokio describes itself as an ‘asynchronous run-time’; in other words, it provides the means to run code written with those nice async keywords.

Since tokio has deep roots in network programming, it supports network primitives as first-class citizens. This lets us read from and write to the network with non-blocking code.

So let’s use tokio by adding it to Cargo.toml with all features enabled:

```toml
[dependencies]
tokio = { version = "0.2.10", features = ["full"] }
```

The client

Now to the client. Its only job is to send lots of updates at a roughly fixed rate. You can find the full code in the GitHub repo.

Since we use tokio, we can tell it directly that our main function should be executed asynchronously:

```rust
use std::error::Error;

#[tokio::main]
async fn main() -> Result<(), Box<dyn Error>> {
    // ...
    Ok(())
}
```

Then we need a UDP socket to send our messages with:

```rust
use tokio::net::UdpSocket;

// In tokio 0.2, send/recv take &mut self, hence `mut`
let mut socket = UdpSocket::bind(local_addr).await?;
socket.connect(&remote_addr).await?;
```
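To make the socket semantics visible without the repo at hand, here is a minimal blocking sketch of the same bind/connect/send sequence using `std::net::UdpSocket`, whose methods tokio's socket mirrors. It is a std-only analog, not the tokio code, and the helper `loopback_roundtrip` is hypothetical. It shows that `connect` on a UDP socket performs no handshake; it only fixes the default peer, which is why `send` takes no address.

```rust
use std::net::UdpSocket;
use std::time::Duration;

/// Hypothetical helper: binds a "server" and a "client" socket on loopback,
/// connects the client and sends one datagram. Returns what the server got.
fn loopback_roundtrip(payload: &[u8]) -> std::io::Result<Vec<u8>> {
    // Port 0 lets the OS pick a free port for each socket
    let server = UdpSocket::bind("127.0.0.1:0")?;
    let client = UdpSocket::bind("127.0.0.1:0")?;
    // Fail instead of hanging forever if the datagram never arrives
    server.set_read_timeout(Some(Duration::from_secs(2)))?;

    // For UDP, connect() sends nothing: it only records the default peer,
    // which is why send() below needs no destination address
    client.connect(server.local_addr()?)?;
    client.send(payload)?;

    let mut buf = [0u8; 1500];
    let (len, src) = server.recv_from(&mut buf)?;
    // The server sees the client's bound address as the source
    assert_eq!(src, client.local_addr()?);
    Ok(buf[..len].to_vec())
}
```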

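Now for the payload: in this case a single i32 in a struct. Since we don’t care about the content of these updates, we send the same update over and over again:

```rust
// A single i32 wrapped in a struct; the serde derives let bincode encode it
#[derive(serde::Serialize, serde::Deserialize)]
struct MovementUpdate { id: i32 }

let update = MovementUpdate { id: 7 };
let encoded = bincode::serialize(&update).unwrap();
```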
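What actually goes over the wire is tiny. bincode 1.x's top-level `serialize` writes fixed-size integers in little-endian order and adds nothing for a plain struct wrapper, so the encoded update is just four bytes. The hand-rolled equivalent below (hypothetical `encode_movement_update`, shown only to make the byte layout visible, not a replacement for bincode) produces the same bytes:

```rust
/// Hypothetical hand-rolled equivalent of bincode 1.x `serialize` for
/// `MovementUpdate { id: i32 }`: the struct wrapper adds no bytes, and the
/// i32 is written as 4 fixed-size little-endian bytes.
fn encode_movement_update(id: i32) -> [u8; 4] {
    id.to_le_bytes()
}
```

At 4 bytes, this payload is even smaller than the 32-byte estimate from the scenario, so per-packet overhead dominates completely.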

Now, in a loop, we send the serialized update a few million times:

```rust
socket.send(&encoded).await?;
```
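The snippet leaves out how the client holds its "roughly fixed rate". One common approach, assumed here and not necessarily what the repo does, is to compute how many packets should have gone out by now and send the shortfall in a burst. This sketch uses a blocking `std::net::UdpSocket` instead of tokio, and the helpers `packets_due` and `send_paced` are hypothetical:

```rust
use std::net::UdpSocket;
use std::thread;
use std::time::{Duration, Instant};

/// Number of packets that should have been sent after `elapsed`
/// when targeting `rate_pps` packets per second.
fn packets_due(rate_pps: u64, elapsed: Duration) -> u64 {
    // u128 arithmetic avoids overflow for long runs at high rates
    (rate_pps as u128 * elapsed.as_nanos() / 1_000_000_000) as u64
}

/// Sends `total` copies of `payload` at roughly `rate_pps` on a connected
/// socket, catching up in bursts instead of sleeping once per packet.
fn send_paced(socket: &UdpSocket, payload: &[u8], rate_pps: u64, total: u64) -> std::io::Result<u64> {
    let start = Instant::now();
    let mut sent = 0;
    while sent < total {
        let due = packets_due(rate_pps, start.elapsed()).min(total);
        while sent < due {
            socket.send(payload)?;
            sent += 1;
        }
        if sent < total {
            // Sleeping per packet would tie the rate to timer resolution;
            // a short sleep between bursts keeps the average on target
            thread::sleep(Duration::from_micros(100));
        }
    }
    Ok(sent)
}
```

Sending in bursts matters at these rates: at 500Kpps the gap between packets is 2 µs, far below what `sleep` can reliably resolve.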

The server

The first server should perform the following steps:

- Accept a packet
- Parse it
- Increment the counter
- Send it to the worker task for processing
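These steps can be sketched without tokio, using OS threads and `std::sync::mpsc::sync_channel` in place of tasks and tokio's channel. This is a blocking analog for illustration, not the author's server: `run_pipeline` and its `expected` stop condition are hypothetical, and the payload is decoded by hand as the 4 little-endian bytes bincode produces for a lone i32.

```rust
use std::net::UdpSocket;
use std::sync::mpsc::sync_channel;
use std::thread;

/// Std-only sketch of the server pipeline: one thread accepts and parses
/// datagrams, a second thread counts them. Stops after `expected` packets.
fn run_pipeline(socket: UdpSocket, expected: u64) -> u64 {
    // Bounded, like tokio's mpsc::channel(100): send blocks while it is full
    let (tx, rx) = sync_channel::<i32>(100);

    let receiver = thread::spawn(move || {
        let mut buf = [0u8; 1500];
        let mut forwarded = 0;
        while forwarded < expected {
            // Accept a packet; `len` is how many bytes actually arrived
            let (len, _src) = socket.recv_from(&mut buf).expect("recv failed");
            // Parse it: a lone i32 encoded as 4 little-endian bytes
            if len < 4 {
                continue; // skip malformed datagrams instead of panicking
            }
            let id = i32::from_le_bytes([buf[0], buf[1], buf[2], buf[3]]);
            // Send it to the worker thread for processing
            tx.send(id).expect("worker hung up");
            forwarded += 1;
        }
        // `tx` is dropped here, which ends the worker's loop below
    });

    let worker = thread::spawn(move || {
        let mut msg_ctr: u64 = 0;
        for _id in rx {
            msg_ctr += 1; // increment the counter
        }
        msg_ctr
    });

    receiver.join().expect("receiver thread panicked");
    worker.join().expect("worker thread panicked")
}
```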

Since the full code is in the repo, I will only highlight the interesting parts here.

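We spawn our two async fns from the main function, which itself is not async. Spawning happens on an explicitly created runtime:

```rust
use futures::future::join_all;
use tokio::runtime::Runtime;
use tokio::sync::mpsc;

// In tokio 0.2, block_on takes &mut self, hence `mut`
let mut rt = Runtime::new().expect("failed to create tokio runtime");
let (tx, rx) = mpsc::channel(100);
let handler = rt.spawn(rcv_pass_handler(opt.clone(), rx));
let receiver = rt.spawn(rcv_pass(opt.clone(), tx));
let join_handler = join_all(vec![handler, receiver]);
rt.block_on(join_handler);
```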

The tx and rx halves of the channel connect the two tasks: the receiving task sends parsed packets into tx, and the handler task takes them out of rx.

The rcv_pass function loops over the following code to receive, parse and forward the datagrams:
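The channel created with `mpsc::channel(100)` is bounded. When the handler falls behind, `tx.send(packet).await` in the receiving task waits until there is room again; meanwhile new datagrams queue up in the kernel's socket buffer, which drops packets once it is full. The blocking `std::sync::mpsc::sync_channel` behaves similarly, and this std-only sketch (hypothetical helper `sends_before_full`) uses its non-blocking `try_send` to show exactly when a bounded channel starts pushing back:

```rust
use std::sync::mpsc::{sync_channel, TrySendError};

/// Fills a bounded channel that nobody reads from and returns how many
/// messages it accepted before reporting that it is full.
fn sends_before_full(capacity: usize) -> usize {
    // Keep the receiver alive (but never read) so the channel stays open
    let (tx, _rx) = sync_channel::<usize>(capacity);
    let mut accepted = 0;
    loop {
        match tx.try_send(accepted) {
            Ok(()) => accepted += 1,
            // "Full" is the backpressure signal: a producer must now wait
            // (as tokio's send().await does) or drop the message
            Err(TrySendError::Full(_)) => return accepted,
            Err(TrySendError::Disconnected(_)) => unreachable!("receiver is still alive"),
        }
    }
}
```

A capacity of 0 is a rendezvous channel: with no receiver waiting, not even one message fits.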

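```rust
// recv_from returns the datagram length and the sender's address
let (len, _src) = socket.recv_from(&mut buf).await.unwrap();
// Only decode the bytes that were actually received
let packet: MovementUpdate = bincode::deserialize(&buf[..len]).unwrap();
tx.send(packet).await.unwrap();
```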

(Sorry for the unwraps, but here I don’t care.)

The handler function loops over the other end of the channel, incrementing a counter and printing its value from time to time:
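If you do care, one way to get rid of the parse `unwrap` is to decode into a `Result` and drop bad datagrams instead of panicking the receiving task, since a single malformed packet from anywhere on the network would otherwise take the server down. This std-only sketch decodes the same 4-byte little-endian layout by hand rather than through bincode; `ParseError`, `parse_update` and `process_batch` are hypothetical names, and `parse_update` expects `&buf[..len]` with the length from `recv_from`.

```rust
use std::fmt;

/// Hypothetical error for datagrams that cannot be decoded.
#[derive(Debug, PartialEq)]
enum ParseError {
    /// Fewer than the 4 bytes an encoded update needs
    TooShort(usize),
}

impl fmt::Display for ParseError {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        match self {
            ParseError::TooShort(n) => write!(f, "datagram too short: {} bytes, need 4", n),
        }
    }
}

/// Decodes the leading little-endian i32 of a received datagram.
fn parse_update(datagram: &[u8]) -> Result<i32, ParseError> {
    match datagram {
        [a, b, c, d, ..] => Ok(i32::from_le_bytes([*a, *b, *c, *d])),
        _ => Err(ParseError::TooShort(datagram.len())),
    }
}

/// Keeps the valid updates and counts the dropped ones instead of
/// stopping at the first bad packet.
fn process_batch(datagrams: &[&[u8]]) -> (Vec<i32>, usize) {
    let mut forwarded = Vec::new();
    let mut dropped = 0;
    for d in datagrams {
        match parse_update(d) {
            Ok(id) => forwarded.push(id),
            Err(e) => {
                eprintln!("dropping datagram: {}", e);
                dropped += 1;
            }
        }
    }
    (forwarded, dropped)
}
```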

```rust
while let Some(_packet) = rx.recv().await {
    msg_ctr += 1;
    // print from time to time
}
```
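"From time to time" can be made concrete in several ways. One option, sketched here with a hypothetical `RateReporter`, is to count every message but only look at the clock every 1024 messages, and to emit the packets-per-second rate at most once per interval. In the handler loop it would replace the comment with something like `if let Some(pps) = reporter.tick() { println!("{:.0} pps", pps); }`.

```rust
use std::time::{Duration, Instant};

/// Packets per second for `count` packets received within `elapsed`.
fn rate_pps(count: u64, elapsed: Duration) -> f64 {
    count as f64 / elapsed.as_secs_f64()
}

/// Hypothetical helper: counts messages and reports the rate roughly once
/// per `interval`, reading the clock only every 1024 messages.
struct RateReporter {
    total: u64,
    since_last: u64,
    last: Instant,
    interval: Duration,
}

impl RateReporter {
    fn new(interval: Duration) -> Self {
        RateReporter { total: 0, since_last: 0, last: Instant::now(), interval }
    }

    /// Call once per message; returns Some(pps) when a report is due.
    fn tick(&mut self) -> Option<f64> {
        self.total += 1;
        self.since_last += 1;
        if self.total % 1024 != 0 {
            return None; // keep the hot path free of clock reads
        }
        let elapsed = self.last.elapsed();
        if elapsed < self.interval {
            return None;
        }
        let pps = rate_pps(self.since_last, elapsed);
        self.since_last = 0;
        self.last = Instant::now();
        Some(pps)
    }
}
```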

And that’s it. Now let’s have a look at the results.

Results

This is what I found when running the code; YMMV.

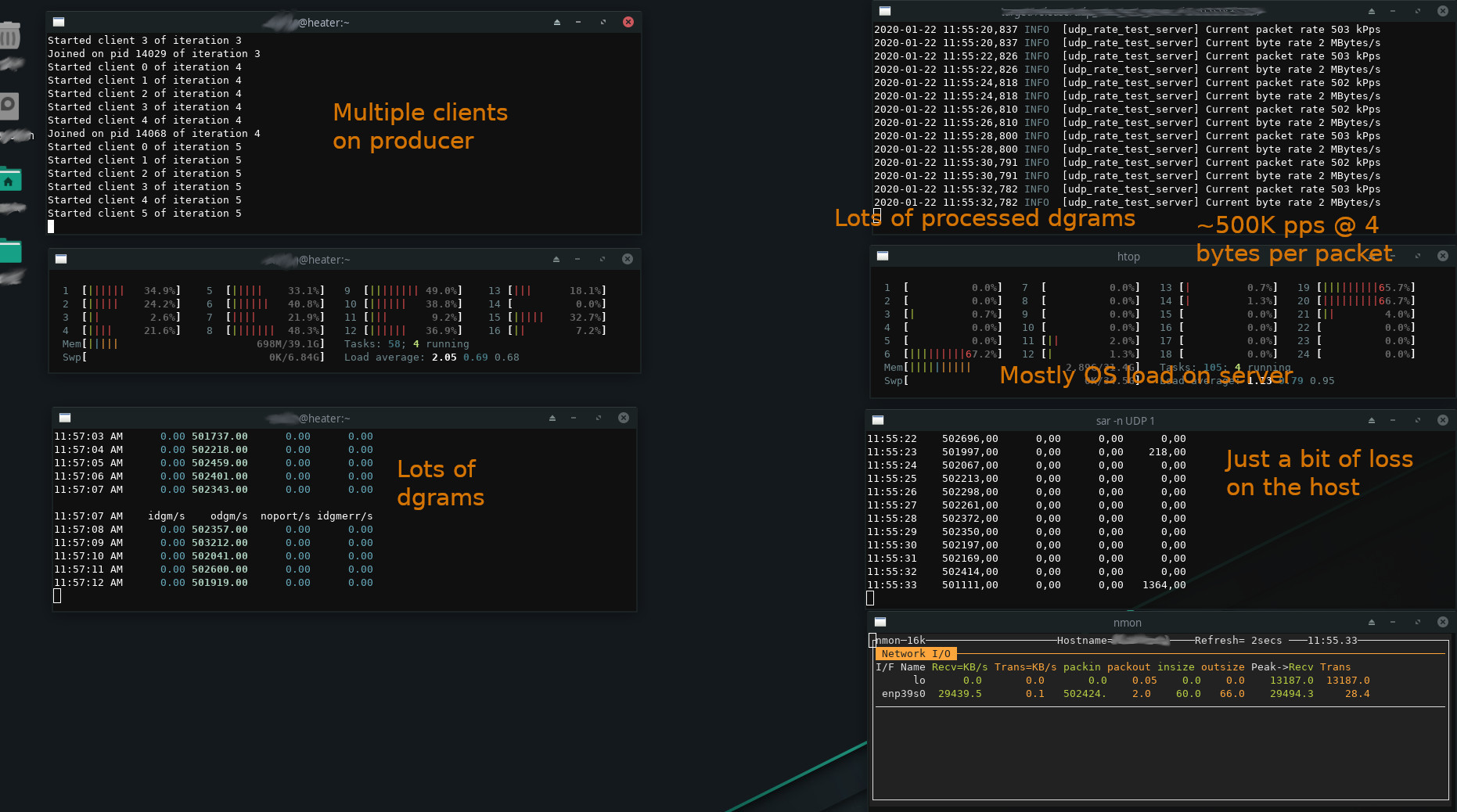

A screenshot when running on multiple hosts

Same host

When running client and server on the same host, my machine achieved about 700Kpps. This is good to know, but it has no relevance for practical use cases, except perhaps for some IPC.

Different hosts

tl;dr: The maximum rate was 500Kpps.

To get as close as possible to the real use case, the server ran on a machine with decent power and the clients ran on several other hosts, all connected via a gigabit Ethernet switch.

No matter how many clients were running, given sufficient send rates, the throughput was capped at 500Kpps. But what was limiting the throughput here?

When running two independent instances of the server, the total packet rate was, again, about 500Kpps. I therefore conclude that either the OS or the hardware is limiting the throughput.

If someone has a Windows machine, it would be interesting to see whether there is any difference in performance.
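That the gigabit link's bandwidth is not what caps the rate can be checked with back-of-the-envelope arithmetic. Assuming standard Ethernet framing (no VLAN tag, no IP options), each datagram costs its payload plus the UDP, IPv4 and Ethernet headers and the frame check sequence, padded to the 64-byte minimum frame, plus 8 bytes of preamble and 12 bytes of inter-frame gap. The hypothetical helpers below do that calculation:

```rust
/// Bytes one UDP/IPv4 datagram occupies on an Ethernet wire,
/// assuming no VLAN tag and no IP options.
fn wire_bytes(payload: u64) -> u64 {
    const UDP_HEADER: u64 = 8;
    const IPV4_HEADER: u64 = 20;
    const ETH_HEADER: u64 = 14; // destination MAC, source MAC, EtherType
    const FCS: u64 = 4; // frame check sequence
    const MIN_FRAME: u64 = 64; // shorter frames are padded up to this
    const PREAMBLE_SFD: u64 = 8;
    const INTERFRAME_GAP: u64 = 12;

    let frame = (ETH_HEADER + IPV4_HEADER + UDP_HEADER + payload + FCS).max(MIN_FRAME);
    frame + PREAMBLE_SFD + INTERFRAME_GAP
}

/// Maximum packets per second a link of `bits_per_sec` can carry.
fn line_rate_pps(bits_per_sec: u64, payload: u64) -> u64 {
    bits_per_sec / (wire_bytes(payload) * 8)
}

/// Bits per second on the wire at a given packet rate.
fn link_bits_per_sec(pps: u64, payload: u64) -> u64 {
    pps * wire_bytes(payload) * 8
}
```

With the 4-byte bincode payload, every packet costs 84 bytes of wire time, so a gigabit link tops out at about 1.49M pps, and 500Kpps uses about 336 Mbit/s, roughly a third of the link. Even the 32-byte updates from the scenario (98 bytes on the wire) would allow about 1.28M pps.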

Conclusion

Rust and tokio are fast. Like, really fast. But more importantly, they are elegant: they allow you to do things at the speed of C++ without having to care about all the tiny details. Combine that with ergonomic features like async functions, and you have my favourite programming language.